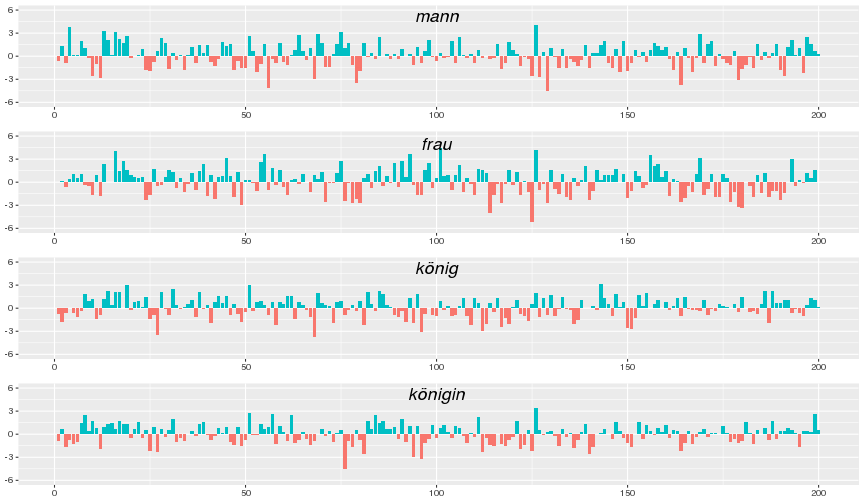

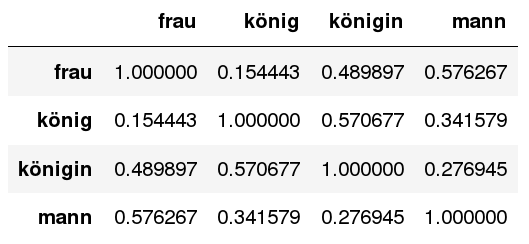

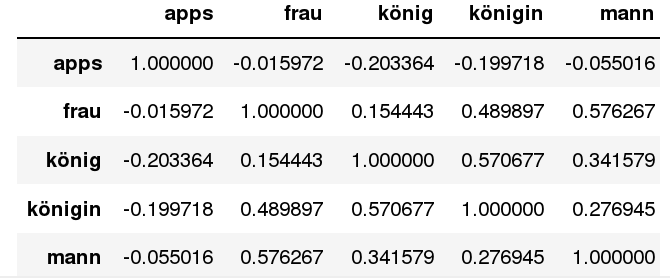

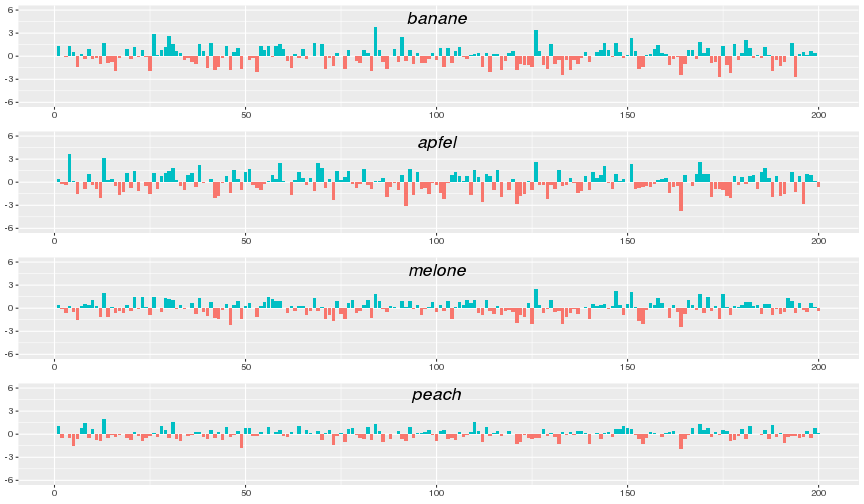

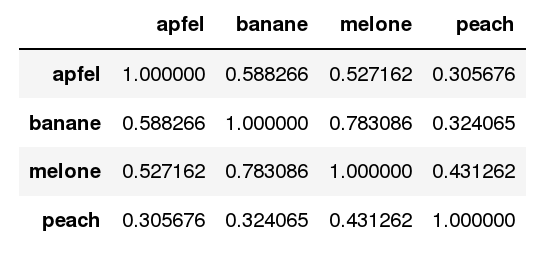

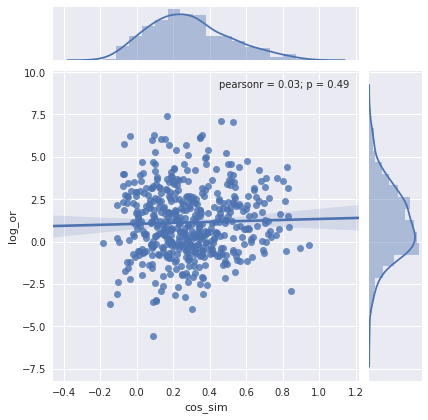

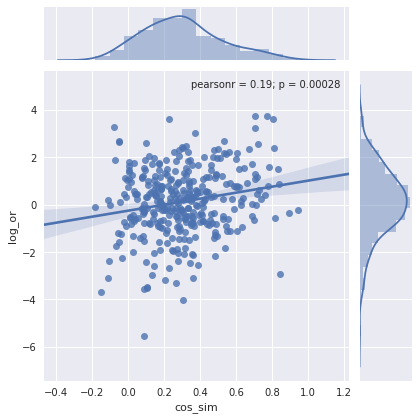

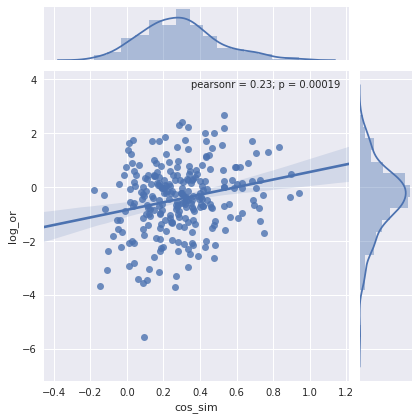

class: center, middle, inverse, title-slide

# Exploring Code-switching and Borrowing Using Word Vectors

### <div class="line-block">Steven Coats<br /> English Philology, University of Oulu, Finland<br /> <a href="mailto:steven.coats@oulu.fi">steven.coats@oulu.fi</a></div>

### <div class="line-block"><br /> 14th ESSE Conference, Brno<br /> September 1st, 2018</div>

---
class: inverse, center, middle
background-image: url(https://cc.oulu.fi/~scoats/oululogoRedTransparent.png);
background-repeat: no-repeat;
background-size: 80px 57px;
background-position:right top;
exclude: true

---
layout: true

<div class="my-header"><img border="0" alt="W3Schools" src="https://cc.oulu.fi/~scoats/oululogonewEng.png" width="80" height="80"></div>

<div class="my-footer"><span>Steven Coats              Exploring Code-switching and Borrowing using Word Vectors | ESSE 18</span></div>

---

## Outline

1. Code-switching and borrowing German-English in a Twitter data set
2. Data collection
3. Word vectors and embeddings
4. Identification of code-switches and borrowings
5. Tracing changes in meaning and visualizing borrowings

.footnote[Slides for the presentation are on my homepage at https://cc.oulu.fi/~scoats]

---

### Code-switching and borrowing German-English

Code-switching: Use of two or more languages in an utterance/turn

Lexical borrowing: Use of a second-language lexical item as a lone element within an L1 matrix (Myers-Scotton 1997)

--

#### German-English code-switched tweet

- Der Dauerregen ist zuviel für unseren Platz, daher müssen wir das heutige Spiel absagen. - Land under, the rain won today. So it's a rainout.

.small[(The unrelenting rain is too much for our pitch, so we have to cancel today's game...)]

--

#### German tweets with English borrowings

- @user Gibt es schon einen Ort, also location für das Treffen?

.small[(*@user Is there already a place, that is, a location for the meet-up?*)]

--

- Bahhhh draußen scheint voll die Sonne. EKELHAFT. Erinnert mich daran, dass ich noch immer nicht in shape für den Sommer bin.

.small[(*Bahhhh the sun is totally shining outside. DISGUSTING. Reminds me that I'm still not in shape for summer.*)]

---

### Code-switching and borrowing German-English in a Twitter data set

- Lexical borrowings can undergo semantic shift: many Anglicisms in German have meanings incommensurate with their meanings in English (Onysko 2007)

--

@user Danke für den like

.small[(*@user Thanks for the like!*)]

--

@user yo danke für deinen follow bro! ich weiß das zu schätzen. #RealHipHop

.small[(*@user yo thanks for your follow bro! I appreciate it. #RealHipHop*)]

--

- How can we trace the semantic shift of English borrowings in German?
--

- By using word embeddings from large corpora that contain **borrowings**

---

### Data collection

- 653,457,659 tweets with *place* metadata collected globally from the Twitter Streaming API from November 2016 until June 2017

--

- 60,683 authors of at least one German-language tweet with place metadata from Germany, Austria or Switzerland identified and all of their tweets/most recent 3,250 tweets (whichever was larger) downloaded from the REST API in April 2018

--

- Retain tweets in German according to Twitter's metadata

--

- 36,240,530 (59.3%) of tweets in German = 534,211,366 tokens

---

### Identifying sentences with code-switching/borrowing

- Tokenize all tweets, remove punctuation, URLs, user names, hashtags, emoji

--

- Match each word in each message with large German and English word lists

--

Anyone in Oberwart, der am Abend nach Wien fährt & mir was abholen und mitbringen könnte? Biete Aufwandsentschädigung & ewige Dankbarkeit!

.small[(*Anyone in Oberwart who is driving this evening to Vienna and can pick up something and bring it to me? I offer reimbursement for the effort & eternal thanks!*)]

- 1 English word of 19: Borrowing

--

Seit Anfang dieses Jahres habe ich soooo oft heißhunger auf Asiatisches Essen. This year I have so often cravings for asian #food.

- 9 English words of 21: Code-switching

--

- We can distinguish code-switching from borrowing on the basis of counts of English and German types

---

### Lexica

English words:

- 236,736 English words from NLTK (Bird et al. 2009)

--

German words:

- 50k most frequent German words ([Dave 2017](https://github.com/hermitdave/FrequencyWords/tree/master/content/2016/de), Lison & Tiedemann 2016)

--

Issue: Cross-contamination of word lists (built automatically from web sources)

---

### Corpus for word embeddings

- Tweets with at least 8 tokens, of which one or two are English words from the list
- 2,488,673 of 36m tweets have borrowings (many more have code-switches!)
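
The word-list matching described on the preceding slides can be sketched roughly as follows. This is a minimal illustration and not the code used for the talk: the function names, the toy word lists, and the rule that a token counts as English only if it is absent from the German list are all assumptions. The 8-token minimum and the "one or two English words" criterion come from the slides; treating more than two English words as code-switching is an assumed cut-off.

```python
import re

# Strip URLs, @user names, and #hashtags before extracting words.
NOISE = re.compile(r"https?://\S+|[@#]\w+")
# Runs of letters only: drops punctuation, digits, and emoji.
WORD = re.compile(r"[^\W\d_]+")


def tokenize(tweet):
    """Lowercase a tweet and return its word tokens."""
    return WORD.findall(NOISE.sub(" ", tweet.lower()))


def classify(tweet, english, german, min_tokens=8, max_borrowed=2):
    """Label a tweet by how many of its tokens are English-only.

    Assumption: a token counts as English if it is in the English list
    but not in the German list (a guard against shared forms like "in").
    """
    tokens = tokenize(tweet)
    n_english = sum(1 for t in tokens if t in english and t not in german)
    if n_english == 0:
        label = "no English"
    elif n_english <= max_borrowed:
        # The embedding corpus on the slide keeps such tweets only if
        # they have at least min_tokens tokens.
        label = "borrowing" if len(tokens) >= min_tokens else "too short"
    else:
        label = "code-switching"  # assumed cut-off, not stated in the talk
    return label, n_english, len(tokens)


# Toy word lists for illustration only (hypothetical, not the real lexica).
GERMAN = {"gibt", "es", "schon", "einen", "ort", "also", "für", "das",
          "treffen", "land", "so"}
ENGLISH = {"also", "location", "land", "under", "the", "rain", "won",
           "today", "so", "it", "s", "a", "rainout"}

print(classify("@user Gibt es schon einen Ort, also location für das Treffen?",
               ENGLISH, GERMAN))
print(classify("Land under, the rain won today. So it's a rainout.",
               ENGLISH, GERMAN))
```

Excluding tokens that appear in both lists is one simple way to soften the cross-contamination issue noted on the Lexica slide, at the cost of missing borrowings whose forms also exist in German.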
---

### Word embeddings

- Distributional hypothesis (Harris 1968): Word meanings correspond to their aggregate contexts of use

--

- Collocational information can be represented with vectors of co-occurrence probabilities within a word span

--

- Similarity of collocational context (and thus meaning) for any two types in a data set (corpus) can then be quantified

--

- Word2Vec algorithm (Mikolov et al. 2013) in Gensim (Řehůřek and Sojka 2010), 5-token co-occurrence span, minimum of 20 occurrences, 200-dimensional vectors

--

- Vectors for 51,336 types (mostly German words, but many English words as well)

---

### Cosine similarity

- For word types `\(a\)` and `\(b\)`, corresponding to vectors `\(\mathbf{a}\)` and `\(\mathbf{b}\)`:

`$$\text{similarity} = \cos(\theta) = {\mathbf{a} \cdot \mathbf{b} \over \|\mathbf{a}\| \|\mathbf{b}\|} = \frac{ \sum\limits_{i=1}^{n}{a_i b_i} }{ \sqrt{\sum\limits_{i=1}^{n}{a_i^2}} \sqrt{\sum\limits_{i=1}^{n}{b_i^2}} }$$`

--

- Value can range from -1 (types never occur in the same context, meanings probably very different) to 1 (types occur in exactly the same contexts, meanings probably very similar)

---

### Vectors for *mann*, *frau*, *könig*, and *königin*

---

### Cosine similarity

- Cosine similarity preserves semantic relations

---

### Cosine similarity

---

### Vectors for *banane*, *apfel*, *melone*, and *peach*

---

### Cosine similarity

--

- If using data that contains many borrowings, some semantic relations are preserved cross-linguistically

---

### Research questions

- Which English borrowings have meanings closest to or furthest from their German translations (i.e. have undergone semantic shift)?
- What role do frequency effects play?
- How can we visualize the meanings of the Anglicisms in the German lexicon? --- ### Change in meaning of borrowed words - Identify all English words that occur >300 times in the 2.5m borrowing tweets - Translate the words to German using Google Translate API (507 types) - Measure cosine similarity of English borrowings to their German-language lexical equivalents - Regress cosine similarity with log odds ratio of English word frequency to German word frequency --- ### Change in meaning of borrowed words .small[ <div id="htmlwidget-8e3831cfdd19cc305bd3" style="width:100%;height:auto;" class="datatables html-widget"></div> <script type="application/json" data-for="htmlwidget-8e3831cfdd19cc305bd3">{"x":{"filter":"none","data":[["1","2","3","4","5","6","7","8","9","10","11","12","13","14","15","16","17","18","19","20","21","22","23","24","25","26","27","28","29","30","31","32","33","34","35","36","37","38","39","40","41","42","43","44","45","46","47","48","49","50","51","52","53","54","55","56","57","58","59","60","61","62","63","64","65","66","67","68","69","70","71","72","73","74","75","76","77","78","79","80","81","82","83","84","85","86","87","88","89","90","91","92","93","94","95","96","97","98","99","100","101","102","103","104","105","106","107","108","109","110","111","112","113","114","115","116","117","118","119","120","121","122","123","124","125","126","127","128","129","130","131","132","133","134","135","136","137","138","139","140","141","142","143","144","145","146","147","148","149","150","151","152","153","154","155","156","157","158","159","160","161","162","163","164","165","166","167","168","169","170","171","172","173","174","175","176","177","178","179","180","181","182","183","184","185","186","187","188","189","190","191","192","193","194","195","196","197","198","199","200","201","202","203","204","205","206","207","208","209","210","211","212","213","214","215","216","217","218","219","220","221","222","223","224","225","226","227","228","229
","230","231","232","233","234","235","236","237","238","239","240","241","242","243","244","245","246","247","248","249","250","251","252","253","254","255","256","257","258","259","260","261","262","263","264","265","266","267","268","269","270","271","272","273","274","275","276","277","278","279","280","281","282","283","284","285","286","287","288","289","290","291","292","293","294","295","296","297","298","299","300","301","302","303","304","305","306","307","308","309","310","311","312","313","314","315","316","317","318","319","320","321","322","323","324","325","326","327","328","329","330","331","332","333","334","335","336","337","338","339","340","341","342","343","344","345","346","347","348","349","350","351","352","353","354","355","356","357","358","359","360","361","362","363","364","365","366","367","368","369","370","371","372","373","374","375","376","377","378","379","380","381","382","383","384","385","386","387","388","389","390","391","392","393","394","395","396","397","398","399","400","401","402","403","404","405","406","407","408","409","410","411","412","413","414","415","416","417","418","419","420","421","422","423","424","425","426","427","428","429","430","431","432","433","434","435","436","437","438","439","440","441","442","443","444","445","446","447","448","449","450","451","452","453","454","455","456","457","458","459","460","461","462","463","464","465","466","467","468","469","470","471","472","473","474","475","476","477","478","479","480","481","482","483","484","485","486","487","488","489","490","491","492","493","494","495","496","497","498","499","500","501","502","503","504","505","506","507"],["monitoring","module","active","mavericks","released","icons","phone","device","tool","unicorn","platform","conversion","application","preview","product","owner","computing","fundraising","location","login","shiny","cute","drunk","thread","pats","weird","playoff","direct","posting","against","print","poll","rapid","expertise",
"content","plot","blink","debut","fantastic","austrian","smarter","nutshell","animals","bubble","newsroom","reviews","electro","focus","memories","vienna","pokemon","mentions","goalie","pirate","snaps","lyrics","motion","sept","excel","grind","tram","swiss","october","moderation","posted","recruiter","recruiting","fries","hidden","employer","benefit","wear","universe","industry","hiring","consultant","growth","package","experience","awkward","working","stupid","roots","contact","relation","ship","usability","relative","proud","spotlight","career","join","corporate","greatest","thesis","noise","meets","facts","culture","study","lifestyle","outdoor","risk","featured","locations","consumer","brands","concept","screenshot","keynote","thinking","elevator","mindset","summit","invest","lecture","linkedin","seat","workplace","learn","launch","desk","policy","collaboration","healthy","equal","switch","prank","fail","thieves","mansion","react","twitch","challenge","pimp","within","prey","resident","fakes","review","official","uncharted","legacy","origins","creepy","activity","compilation","soccer","feat","qualifying","panic","racing","playground","translate","sightseeing","pranks","turtles","haul","monday","reaction","dive","plays","diary","breath","hater","comments","talks","setup","tutorial","maker","contra","draw","emirates","gamer","opening","theft","features","giveaway","players","merchandise","cities","showcase","custom","premium","finance","flashback","calling","epic","dungeon","setting","worms","surface","collection","boyfriend","replay","gear","augmented","roaming","connect","shops","steam","shared","streams","responsive","wifi","admin","reset","bundle","clients","compact","wireless","closing","asap","cache","calls","intention","leak","nordic","sites","provider","tools","advanced","leadership","posts","crowd","customer","response","messenger","wallet","guides","youth","formula","rankings","sane","viewer","ranking","remix","lyric","professional","dangerous","broadcast
","goals","insane","imagine","cheat","beginner","kills","scenes","basics","keeper","ranked","stranger","closed","conference","mobility","cars","sharing","topic","host","cargo","governance","reminder","disruptive","secure","alliance","vids","voting","timeline","republic","accounts","dudes","crap","battlefield","chapter","shortcut","porn","sidekick","caps","grins","followers","warfare","effect","cringe","communities","ugly","infinite","hella","semesters","embedded","automation","suit","solution","competence","talents","supply","retail","baskets","knowledge","solutions","strategy","ghosts","comp","draft","sign","patriots","hyped","member","fuckin","legit","added","coins","casual","updated","classics","visions","romance","sail","engineer","bottle","daft","orchestra","cultural","tune","throwback","recording","sneak","seminars","excited","wrap","notebooks","course","twins","nights","lens","favorite","essentials","unlimited","tropical","himself","tail","crush","cons","shootings","communications","impression","disaster","items","odyssey","goodies","scene","path","loss","faces","giant","steps","professionals","government","innovations","transition","insight","yesterday","grid","toys","coin","drives","install","checkout","ports","effects","regular","finished","subscribe","finals","competition","rode","invite","interactive","topics","gravity","pirates","artists","daylight","parts","skill","extensions","veggie","trams","sponsored","wallpaper","props","arab","riot","inspired","images","cure","battles","greet","audit","prism","barrier","reef","recall","logistics","poetry","jokes","lines","splash","switzerland","trophy","escort","creators","origin","gears","steelers","offense","thumbs","infinity","indeed","drops","yours","shortcuts","delicious","loud","menu","cave","handmade","acts","meanwhile","gent","available","grumpy","blizzard","maze","nudes","carb","experts","achievement","dont","presentation","struggle","trainers","literally","ratio","shuffle","totally","pulled","keyword","
wedges","inform","inclusive","reigns","arrival","falcons","turnover","spurs","timeout","books","console","germans","protection","awareness","detox","tricky","slots","layer","colour","gardening","rally","hosts","politics","swim","freestyle","climate","expert","pricing","oasis","famous","vital","stud","placement","pulse","present","designed","penalty","binding","meltdown","played","recon","rift","reach","certified","wednesday","nightmare","results","stable","cloudy","critical","ones","trial","movement","editorial","rent","mess","fighting","boobs","badass","managing"],["überwachung","modul","aktiv","einzelgänger","freigegeben","symbole","telefon","gerät","werkzeug","einhorn","plattform","umwandlung","anwendung","vorschau","produkt","inhaber","rechnen","spendensammlung","lage","anmeldung","glänzend","niedlich","betrunken","faden","streicheleinheiten","seltsam","spielstart","direkte","buchung","gegen","drucken","umfrage","schnell","sachverstand","inhalt","handlung","blinken","debüt","fantastisch","österreichisch","schlauer","nussschale","tiere","blase","nachrichtenredaktion","bewertungen","elektro","fokus","erinnerungen","wien","pokémon","erwähnungen","tormann","pirat","schnappschüsse","text","bewegung","september","übertreffen","schleifen","straßenbahn","schweizerisch","oktober","mäßigung","gesendet","personalvermittler","rekrutierung","fritten","versteckt","arbeitgeber","vorteil","tragen","universum","industrie","mieten","berater","wachstum","paket","erfahrung","peinlich","arbeiten","blöd","wurzeln","kontakt","beziehung","schiff","benutzerfreundlichkeit","relativ","stolz","scheinwerfer","werdegang","beitreten","unternehmen","größte","these","lärm","trifft","fakten","kultur","studie","lebensstil","draussen","risiko","gekennzeichnet","standorte","verbraucher","marken","konzept","bildschirmfoto","grundton","denken","aufzug","denkweise","gipfel","investieren","vorlesung","verlinkt","sitz","arbeitsplatz","lernen","starten","schreibtisch","politik","zusammenarbeit","gesund",
"gleich","schalter","streich","scheitern","diebe","villa","reagieren","zucken","herausforderung","zuhälter","innerhalb","beute","bewohner","fälschungen","rezension","offiziell","unbekannt","erbe","ursprünge","gruselig","aktivität","zusammenstellung","fußball","kunststück","qualifizieren","panik","rennen","spielplatz","übersetzen","besichtigung","streiche","schildkröten","schleppen","montag","reaktion","tauchen","theaterstücke","tagebuch","atem","hasser","bemerkungen","gespräche","konfiguration","anleitung","hersteller","kontra","zeichnen","emirate","spieler","öffnung","diebstahl","eigenschaften","hergeben","spieler","waren","städte","vitrine","brauch","prämie","finanzen","rückblende","berufung","epos","verlies","rahmen","würmer","oberfläche","sammlung","freund","wiederholung","ausrüstung","erweitert","wandernd","verbinden","geschäfte","dampf","geteilt","ströme","ansprechend","w-lan","administrator","zurücksetzen","bündeln","kunden","kompakt","kabellos","schließen","schnellstmöglich","zwischenspeicher","anrufe","absicht","leck","nordisch","websites","anbieter","werkzeuge","fortgeschritten","führung","beiträge","menge","kunde","antwort","bote","brieftasche","führer","jugend","formel","ranglisten","gesund","zuschauer","rang","remixen","lyrisch","professionel","gefährlich","übertragung","tore","wahnsinnig","vorstellen","betrügen","anfänger","tötet","szenen","grundlagen","pfleger","rangiert","fremder","geschlossen","konferenz","mobilität","autos","teilen","thema","gastgeber","ladung","führung","erinnerung","störend","sichern","allianz","videos","wählen","zeitleiste","republik","konten","kerle","mist","schlachtfeld","kapitel","abkürzung","porno","kumpel","kappen","grinst","anhänger","krieg","bewirken","kriechen","gemeinschaften","hässlich","unendlich","hallo","semester","eingebettet","automatisierung","passen","lösung","kompetenz","talente","liefern","verkauf","körbe","wissen","lösungen","strategie","geister","komp","entwurf","schild","patrioten","gehypt","mitglied","verd
ammt","legitim","hinzugefügt","münzen","beiläufig","aktualisiert","klassiker","visionen","romantik","segel","ingenieur","flasche","bekloppt","orchester","kulturell","melodie","rückschritt","aufzeichnung","schleichen","seminare","aufgeregt","wickeln","notizbücher","kurs","zwillinge","nächte","linse","favorit","wesentliches","unbegrenzt","tropisch","selbst","schwanz","zerquetschen","nachteile","schießereien","kommunikation","eindruck","katastrophe","artikel","odyssee","leckereien","szene","pfad","verlust","gesichter","riese","schritte","profis","regierung","innovationen","übergang","einblick","gestern","gitter","spielzeuge","münze","fährt","installieren","auschecken","häfen","auswirkungen","regulär","fertig","abonnieren","finale","wettbewerb","ritt","einladen","interaktiv","themen","schwere","piraten","künstler","tageslicht","teile","fertigkeit","erweiterungen","vegetarisch","straßenbahnen","gesponsert","tapete","requisiten","arabisch","randalieren","inspiriert","bilder","heilen","kämpfe","grüßen","prüfung","prisma","barriere","riff","erinnern","logistik","poesie","witze","linien","spritzen","schweiz","trophäe","begleiten","schöpfer","ursprung","getriebe","stahlarbeiter","delikt","daumen","unendlichkeit","tatsächlich","tropfen","deine","verknüpfungen","köstlich","laut","speisekarte","höhle","handgefertigt","handelt","inzwischen","herr","verfügbar","mürrisch","schneesturm","matze","akte","vergaser","experten","leistung","nicht","präsentation","kampf","trainer","buchstäblich","verhältnis","mischen","total","gezogen","stichwort","keile","informieren","inklusive","herrscht","ankunft","falken","umsatz","sporen","auszeit","bücher","konsole","deutsche","schutz","bewusstsein","entgiftung","schwierig","schlüssel","schicht","farbe","gartenarbeit","rallye","gastgeber","politik","schwimmen","freistil","klima","experte","preisgestaltung","oase","berühmt","wichtig","zucht","platzierung","impuls","geschenk","entworfen","elfmeter","bindung","kernschmelze","gespielt","aufklärung","ris
s","erreichen","zertifiziert","mittwoch","albtraum","ergebnisse","stabil","bewölkt","kritisch","einsen","versuch","bewegung","redaktion","miete","chaos","kampf","brüste","knallhart","verwaltung"],[0.345,0.599,0.178,-0.075,0.522,0.527,0.449,0.629,0.705,0.479,0.698,0.18,0.353,0.687,0.409,0.331,0.022,0.379,0.209,0.426,0.128,0.812,0.442,0.373,0.1,0.661,0.376,0.215,0.171,0.267,0.283,0.252,-0.038,0.528,0.534,0.51,0.183,0.762,0.307,0.113,0.459,0.207,0.144,0.561,0.347,0.705,0.489,0.332,0.232,0.69,0.943,0.495,0.823,0.052,0.518,0.333,0.186,0.894,-0.015,-0.062,0.804,0.155,0.607,0.345,0.007,0.561,0.518,0.564,0.047,0.373,0.368,0.033,0.34,0.466,0.049,0.618,0.321,0.419,0.238,0.412,0.087,0.115,0.268,0.104,0.338,0.213,0.673,0.319,0.4,0.082,0.162,0.295,0.455,0.18,0.357,0.096,0.177,0.628,0.285,0.476,0.434,0.174,0.264,0.225,0.606,0.305,0.709,0.295,0.529,0.167,0.125,0.13,0.358,0.475,0.529,0.361,0.333,0.117,0.272,0.248,0.23,0.335,0.216,0.336,0.321,-0.148,0.23,0.1,0.032,-0.017,0.22,0.089,0.099,0.301,0.104,0.014,0.158,-0.048,0.68,0.531,0.009,0.008,0.089,0.194,0.831,0.238,0.255,0.283,-0.035,0.242,0.164,0.305,0.145,0.4,0.286,0.073,0.322,-0.003,0.31,0.279,0.152,0.18,0.41,-0.05,0.605,0.514,0.384,0.502,0.464,0.163,0.67,0.101,0.371,0.396,-0.075,0.121,0.505,-0.029,0.31,-0.11,0.343,0.08,0.038,0.378,0.389,0.319,-0.069,0.281,0.268,0.161,-0.067,0.255,0.432,0.54,0.359,0.307,0.182,-0.069,0.28,0.476,0.022,0.186,0.083,0.392,0.841,0.724,0.612,-0.003,0.483,0.341,0.641,-0.022,0.643,0.357,0.434,0.608,0.145,0.356,0.817,0.836,0.77,0.189,0.273,0.783,0.168,0.376,0.273,0.091,0.415,-0.007,0.18,0.488,0.494,0.031,0.362,0.425,0.487,0.22,0.284,0.054,0.246,0.237,0.089,0.015,-0.11,0.381,0.094,0.305,0.719,0.19,0.02,0.043,0.176,0.736,0.544,0.29,0.268,0.364,0.216,0.084,0.189,0.415,0.134,0.173,0.423,0.845,0.15,0.46,0.041,0.708,0.599,0.497,0.103,0.59,0.192,0.489,0.476,0.206,0.252,0.28,0.01,-0.05,0.072,0.535,0.459,0.152,0.132,0.636,0.23,0.644,0.03,0.217,0.154,0.361,0.085,0.233,0.114,0.1,0.351,0.483,0.017,0.062,0.264,0.233,0.2
02,0.655,0.526,0.286,0.322,0.306,0.652,0.258,0.545,0.329,0.079,0.235,0.215,0.545,0.288,-0.018,0.614,0.247,0.171,0.069,0.378,-0.006,0.385,0.417,0.131,0.541,0.244,0.447,0.261,0.24,0.485,0.053,0.449,0.278,0.107,0.127,0.132,0.223,0.216,0.438,0.193,0.189,0.228,0.451,0.7,0.45,0.176,0.036,0.19,0.208,0.405,0.267,0.211,0.557,0.144,0.303,0.089,0.021,0.276,0.349,-0.066,0.564,0.295,0.292,-0.012,0.103,0.147,0.289,0.549,0.357,0.089,0.239,0.355,0.356,-0.043,0.109,0.335,0.191,0.417,0.082,0.706,0.591,0.831,0.325,0.272,0.206,0.35,0.221,0.161,0.347,-0.001,0.311,0.153,0.247,0.213,0.185,0.501,-0.009,0.575,0.311,0.688,0.309,0.122,0.353,0.304,0.135,-0.076,0.004,-0.002,0.098,0.027,0.167,0.091,0.278,0.181,0.107,0.537,0.266,-0.034,0.304,0.186,0.5,-0.11,-0.052,-0.104,0.361,0.391,0.168,0.19,0.12,0.034,0.341,-0.048,0.092,0.374,0.27,0.482,0.225,0.279,0.008,0.387,0.078,0.355,0.218,0.348,0.464,-0.182,0.193,0.514,0.272,0.164,0.436,0.359,0.528,0.208,0.223,0.342,0.509,0.632,0.078,0.078,0.316,0.118,0.728,0.29,0.28,0.262,0.208,0.139,0.322,0.441,0.152,0.321,0.027,0.18,0.185,0.113,0.202,0.584,0.778,0.015,0.21,0.146,-0.098,0.068,0.067,0.565,0.319,0.113,0.314,0.053,0.45,-0.019,-0.049,0.086,0.142,0.295,0.003,0.087,0.181,0.719,0.044,0.09],[2131,694,892,443,648,926,4282,1314,7041,306,899,1058,495,3807,1573,846,1636,431,6005,2577,716,2089,472,2633,475,1294,550,1164,1159,1048,5297,2214,2273,1206,16659,1050,383,326,495,959,1006,604,343,1463,1365,1155,727,5517,605,3494,5947,976,744,581,1305,1746,1457,1962,1828,818,4581,4777,931,2888,1558,561,2047,317,823,828,308,627,724,628,319,1457,389,565,3001,601,1185,545,959,899,772,381,1997,420,845,691,829,1242,3984,705,500,938,3615,1386,880,823,3585,2372,591,320,1101,569,910,1122,5715,6541,1555,332,666,3812,759,463,2964,775,744,666,2402,528,662,1119,337,410,5407,2185,3362,449,321,392,5912,9847,470,726,312,1530,560,7347,9281,1036,733,799,1523,410,603,844,8658,512,493,1320,342,507,840,371,355,1018,2332,478,480,1163,726,1481,1804,575,1992,1506,3507,1206,1792,674,481,1802,2745,
829,3748,955,344,403,1046,411,1135,5039,1159,627,1076,1340,887,1027,1158,1869,1723,432,607,1466,1315,931,2093,2364,3267,744,3542,1475,3984,1504,1174,1100,637,820,1033,313,847,2734,371,814,856,1378,406,1970,6197,1090,1723,4331,1454,2751,498,3979,629,354,945,326,555,456,500,3310,4180,469,1129,383,380,854,328,642,315,518,822,739,1337,2026,652,1258,743,3194,1209,954,1504,763,1392,495,550,2481,461,363,973,313,2622,14804,414,6521,832,433,2340,643,306,783,342,762,1498,963,1101,932,383,764,570,744,358,507,554,877,300,893,545,534,533,1112,659,622,2005,551,441,360,778,675,869,819,632,366,630,1701,765,786,374,1036,614,333,305,925,321,417,758,304,705,553,385,1062,521,451,402,695,409,327,652,318,2055,372,784,479,327,311,1259,486,447,938,570,352,501,891,369,490,367,466,366,392,432,633,428,347,362,669,384,490,414,347,354,428,407,449,582,315,361,348,1148,519,672,841,746,441,464,582,949,665,576,1041,464,669,459,1452,528,719,342,588,847,544,484,407,1236,501,493,331,333,351,695,1206,308,671,888,1664,757,1784,491,606,302,528,763,610,751,406,547,306,329,344,534,654,502,575,737,441,320,404,440,681,306,777,625,401,311,761,360,828,488,490,392,512,308,503,467,313,334,745,308,338,358,419,317,480,670,355,330,343,329,657,405,443,422,350,371,387,414,320,313,689,439,1083,344,411,445,560,500,445,580,649,511,613,322,374,424,341,378,353,346,469,321,335,594,556,544,536,428,361,525,332,481,304,319,304,445],[327,330,1765,8,190,89,1313,1340,277,209,1734,32,423,676,1485,133,478,3,929,2029,31,191,277,128,5,529,4,334,204,28093,533,1385,7763,24,1146,147,29,215,406,24,190,8,505,158,3,321,207,1328,539,5685,7310,139,9,73,37,2770,665,2845,55,52,125,11,2883,6,396,7,21,38,524,566,757,1238,330,1339,254,682,623,1135,1659,936,5601,1160,150,1028,479,481,29,774,1755,30,37,85,7516,1823,803,142,3078,1166,2154,3296,51,422,448,96,124,203,923,1407,5,4,3472,97,37,519,604,635,323,491,467,4774,3656,408,3383,1292,694,16172,145,164,574,47,370,502,30,873,16,1255,60,109,16,815,1805,238,153,18,344,214,92,2556,17,71,423,1764,182,2
96,106,32,36,55,4046,928,312,4,324,101,16,21,1210,146,808,687,168,486,32,2819,102,80,97,47,2819,8794,575,17,2050,52,220,9,164,38,3,2215,21,202,541,2652,219,136,668,3,794,238,90,508,9,55,null,117,78,77,5452,298,44,645,40,5,207,371,121,59,700,834,145,23,1476,871,1657,1459,3203,37,17,125,979,727,19,694,884,435,22,12,7,686,2578,1131,438,1453,37,559,187,395,408,33,13,72,640,2510,516,1091,2439,17080,313,147,1476,851,27,1722,837,5752,1729,12,265,152,120,1600,36,762,142,215,492,25,38,386,1884,96,49,23,420,326,6817,378,45,183,1118,2644,380,392,777,852,15,7039,1380,1756,172,23,191,234,26,60,1166,1591,135,93599,88,8,902,766,202,96,49,111,455,133,168,30,162,62,386,58,298,495,36,27,1702,78,362,76,681,16,62,7,10399,183,3,220,2,2162,1071,436,7334,80,38,1175,112,257,378,101,620,731,1430,644,129,839,13339,25,29,53,2023,1314,55,35,416,84,4841,989,3454,1090,63,280,139,3475,415,429,1106,60,1157,null,154,311,20,121,37,10,75,21,350,6470,88,254,363,572,94,18,75,862,541,88,296,178,35,3656,67,316,48,101,35,1,7,1504,28,3063,99,10347,25,152,5002,58,256,6,603,1488,3474,3407,2,33,292,101,11,1777,1478,195785,1413,2087,1726,24,365,174,3996,601,364,null,777,1121,340,351,63,715,8,286,1479,577,7817,735,132,12,1037,517,130,1096,30,205,313,3383,408,39,603,836,20,55,130,4124,34,87,217,799,85,284,99,30,3316,349,52,1430,136,2929,139,1453,372,83,340,17,1625,665,648,160,1039,2087,207,50,436],[1.874,0.743,-0.682,4.014,1.227,2.342,1.182,-0.02,3.235,0.381,-0.657,3.498,0.157,1.728,0.058,1.85,1.23,4.967,1.866,0.239,3.14,2.392,0.533,3.024,4.554,0.895,4.924,1.248,1.737,-3.289,2.296,0.469,-1.228,3.917,2.677,1.966,2.581,0.416,0.198,3.688,1.667,4.324,-0.387,2.226,6.12,1.28,1.256,1.424,0.116,-0.487,-0.206,1.949,4.415,2.074,3.563,-0.462,0.784,-0.372,3.504,2.756,3.601,6.074,-1.13,6.177,1.37,4.384,4.58,2.121,0.451,0.38,-0.899,-0.68,0.786,-0.757,0.228,0.759,-0.471,-0.698,0.593,-0.443,-1.553,-0.755,1.855,-0.134,0.477,-0.233,4.232,-0.611,-0.731,3.137,3.109,2.682,-0.635,-0.95,-0.474,1.888,0.161,0.173,-0.895,-1.388,4.253,1.7
26,0.277,1.204,2.184,1.031,-0.014,-0.226,7.041,7.4,-0.803,1.23,2.89,1.994,0.228,-0.316,2.217,0.456,0.466,-1.97,-0.42,0.258,-1.631,-0.144,-0.722,-3.675,3.619,2.59,1.768,2.257,-0.142,-0.247,5.284,2.423,3.38,-0.547,1.649,2.642,3.555,2.199,1.637,1.471,1.567,3.793,1.488,0.65,1.88,-1.108,6.233,1.976,0.153,-0.29,0.631,0.538,2.07,2.45,2.289,2.918,-0.551,-0.663,0.431,5.672,0.807,2.685,4.725,3.31,0.499,2.334,1.468,0.563,2.367,0.327,2.71,-0.447,3.293,2.338,3.654,3.012,-2.103,-3.083,0.598,3.185,-0.591,4.574,1.662,4.244,1.881,3.563,5.689,-0.769,4.01,2.225,1.158,-1.815,1.019,2.378,0.677,5.738,0.969,2.296,3.592,0.382,5.975,3.289,null,2.554,2.711,2.659,-2.147,1.012,3.156,-0.723,3.053,6.304,0.583,0.786,1.956,3.151,-0.545,0.86,3.755,3.858,0.155,1.604,-0.131,0.634,-1.861,4.678,3.611,1.041,-0.035,-0.802,3.375,-0.42,-0.57,2.029,5.247,3.666,5.083,-0.583,-1.915,-0.281,-0.289,-0.817,2.142,-0.076,1.481,0.626,1.187,4.117,3.915,2.861,0.149,0.241,0.851,-0.134,-0.483,-3.108,1.492,1.214,-0.987,1.07,2.838,-1.557,0.151,-2.911,0.416,7.118,0.446,3.759,1.936,-1.307,4.174,-0.17,0.768,1.292,-0.364,3.417,3.674,0.914,-0.537,2.273,2.056,3.503,0.305,0.825,-2.947,0.294,2.511,1.567,-1.316,-1.085,0.361,0.309,-0.377,0.266,3.783,-2.426,0.374,-1.159,0.942,2.751,1.404,1.059,3.509,2.614,-0.612,-1.469,1.54,-4.008,2.163,4.588,-0.88,0.302,1.112,1.244,1.828,2.12,-0.349,1.143,1.507,2.316,1.471,2.188,-0.003,2.907,0.559,-0.093,2.413,3.248,-1.426,1.433,0.588,1.431,1.104,3.146,2.537,4.226,-3.46,0.53,6.039,0.793,5.409,-0.835,-0.631,-0.214,-2.684,2.41,2.273,-0.875,1.187,0.595,-0.032,1.356,-0.361,-0.144,-1.206,-0.618,1.032,-0.226,-3.548,2.976,2.659,1.879,-1.743,-1.122,2.001,2.552,0.336,1.322,-2.596,-1.044,-1.102,-0.742,2.367,1.1,1.68,-2.064,0.112,0.305,-0.153,2.405,-0.697,null,1.103,0.766,3.133,2.485,2.658,4.275,1.517,3.332,0.884,-2.476,1.705,0.471,1.225,-0.133,1.657,2.912,1.491,-0.898,0.25,2.618,0.04,1.327,3.234,-0.787,2.425,1.731,2.325,1.792,2.155,6.269,4.691,-0.902,3.289,-2.021,1.709,-3.521,2.577,0.817,-2.237,2.423,0.673,4
.563,0.201,-1.216,-2.385,-2.132,5.394,3.027,0.047,2.04,4.04,-1.489,-1.559,-5.55,-1.367,-0.924,-1.263,3.016,0.071,1.079,-2.563,-0.178,0.249,null,-0.844,-0.409,-0.099,-0.038,1.737,-0.534,3.679,0.518,-0.792,-0.486,-3.165,-0.762,0.913,4.003,-0.94,-0.154,1.177,-1.141,2.515,0.635,0.28,-2.358,-0.265,2.872,-0.317,0.259,2.845,2.011,1.231,-1.997,2.688,1.632,0.983,-0.208,1.794,0.769,1.179,2.523,-2.057,-0.023,1.984,-1.399,0.934,-1.832,0.837,-1.467,0.468,1.902,0.47,3.451,-1.334,-0.611,-0.21,0.73,-0.77,-1.926,0.432,1.805,0.02]],"container":"<table class=\"display\">\n <thead>\n <tr>\n <th> <\/th>\n <th>eng<\/th>\n <th>ger<\/th>\n <th>cos_sim<\/th>\n <th>freq_eng<\/th>\n <th>freq_ger<\/th>\n <th>log_or<\/th>\n <\/tr>\n <\/thead>\n<\/table>","options":{"pageLength":1000,"scrollY":"250px","columnDefs":[{"className":"dt-right","targets":[3,4,5,6]},{"orderable":false,"targets":0}],"order":[],"autoWidth":false,"orderClasses":false,"lengthMenu":[10,25,50,100,1000]}},"evals":[],"jsHooks":[]}</script>
]

- English-German lexical pairs with high cosine similarity values: English borrowing means more or less the same as the German lexical item
- English-German pairs with low cosine similarity values: Borrowing has undergone semantic shift

---

### Change in meaning of borrowed words

--

- Very weak correlation between the English-to-German frequency log odds ratio and cosine similarity...
**BUT** --- ### Change in meaning of borrowed words  - Only types for which the German lexemes occur at least 100 times --- ### Change in meaning of borrowed words  - Only types for which the English and German lexemes occur at least 300 times --- ### 3,845 common German words and 227 common English borrowings <div class="midcenter"> <iframe src="https://cc.oulu.fi/~scoats/Brno_CS3.html" style="max-width: 100%" sandbox="allow-same-origin allow-scripts" width="100%" height="400" scrolling="yes" seamless="seamless" frameborder="0" align="middle"> </iframe> </div> .small[200 dimensions reduced to 2 with t-SNE (van der Maaten & Hinton 2008). Blue: German word types. Yellow: English word types. Green: German lexical equivalents of the English types] --- ### Summary - Large corpora of messages containing borrowings or code-switches can be created from social media (Twitter) - Word list methods can be used to identify messages with borrowings or code-switches - Word embeddings may be able to shed light on semantic shift of lexical borrowings --- ### Issues to address - "Contaminated" word lists - Manually correct word lists, use stemmers - Inaccurate translations (polysemy, case syncretism) -- - Use "artificial codeswitching" sentences to train the embedding models (Gouws and Søgaard 2015, Wick et al. 2016) - Use WordNet and German WordNet to more accurately compare semantic fields - Consider English borrowings in more than one language (cf. Görlach 2001) --- # Thank you! Děkuji! Danke! --- ### References I .small[ .hangingindent[ Bird, S., Klein, E. and Loper, E. 2009. *Natural Language Processing with Python*. Sebastopol, CA: O'Reilly. Dave, H. 2016. [FrequencyWords](https://github.com/hermitdave/FrequencyWords/tree/master/content/2016/de). van der Maaten, L. and Hinton, G. 2008. Visualizing high-dimensional data using t-SNE. *Journal of Machine Learning Research* 9: 2579–2605. Faruqui, M. and Padó, S. 2010. 
Training and evaluating a German named entity recognizer with semantic generalization. In *Proceedings of Konvens 2010*. Saarbrücken, Germany. Görlach, M. (ed.). 2001. *A Dictionary of European Anglicisms*. Cambridge, UK: Cambridge University Press. Gouws, S. and Søgaard, A. 2015. Simple task-specific bilingual word embeddings. *Human Language Technologies: The 2015 Annual Conference of the North American Chapter of the ACL*, 1386–1390. Harris, Z. 1968. *Mathematical Structures of Language*. New York: Interscience. Lison, P. and Tiedemann, J. 2016. OpenSubtitles2016: Extracting large parallel corpora from movie and TV subtitles. *Proceedings of the 10th International Conference on Language Resources and Evaluation (LREC 2016)*, 923–929. Manning, C. D., Surdeanu, M., Bauer, J., Finkel, J., Bethard, S. J. and McClosky, D. 2014. The Stanford CoreNLP natural language processing toolkit. *Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics: System Demonstrations*, 55–60. Mikolov, T., Yih, W. and Zweig, G. 2013. Linguistic regularities in continuous space word representations. *Proceedings of HLT-NAACL 13*, 746–751. ]] --- ### References II .small[ .hangingindent[ Myers-Scotton, C. 1997. Code-switching. In *The Handbook of Sociolinguistics*, ed. Florian Coulmas, 212–237. Oxford: Blackwell. Onysko, A. 2007. *Anglicisms in German: Borrowing, lexical productivity, and written codeswitching*. Berlin/New York: De Gruyter. Řehůřek, R. and Sojka, P. 2010. Software framework for topic modelling with large corpora. *Proceedings of the LREC 2010 Workshop on New Challenges for NLP Frameworks*, 45–50. Roesslein, J. 2015. [Tweepy](https://github.com/tweepy/tweepy). Schmid, H., Fitschen, A. and Heid, U. 2004. SMOR: A German computational morphology covering derivation, composition, and inflection. *Proceedings of the 4th International Conference on Language Resources and Evaluation (LREC 2004)*, 1263–1266. Schreiber, J. 2017. 
[A German word list for GNU Aspell](https://sourceforge.net/projects/germandict). Wick, M., Kanani, P. and Pocock, A. 2016. Minimally-constrained multilingual embeddings via artificial code-switching. *Proceedings of the 30th AAAI Conference on Artificial Intelligence (AAAI-16)*, 2849–2855. ]]
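---

### Appendix: sketch of the two measures

A minimal sketch of the two quantities compared on the "Change in meaning of borrowed words" slides, assuming that `cos_sim` is the cosine similarity between the vectors of an English borrowing and its German lexical equivalent, and that `log_or` is a log odds ratio of their corpus frequencies. The function names, the `total_tokens` parameter, and the toy values are illustrative assumptions, not the code used for the study.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


def log_odds_ratio(freq_eng, freq_ger, total_tokens):
    """Natural log of the odds of the English type over the odds of the
    German type, both counted in a corpus of total_tokens tokens
    (assumed definition; requires 0 < freq < total_tokens)."""
    odds_eng = freq_eng / (total_tokens - freq_eng)
    odds_ger = freq_ger / (total_tokens - freq_ger)
    return math.log(odds_eng / odds_ger)


if __name__ == "__main__":
    # Toy 3-dimensional vectors standing in for 200-dimensional embeddings.
    eng_vec = [0.2, 1.1, -0.4]
    ger_vec = [0.3, 0.9, -0.5]
    print(cosine_similarity(eng_vec, ger_vec))
    print(log_odds_ratio(300, 1200, 1_000_000))
```

Under these assumptions, a pair whose cosine similarity is near 1 would count as semantically close, while a positive log odds ratio would indicate that the English type is the more frequent of the two.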